
Why real-time data integration is crucial, replacing batch processing to meet modern business demands, enhance decision-making, and ensure compliance.

Before exploring why the shift from batch to real-time integration matters, it helps to understand what these terms mean in practice.

Batch processing is a method where data moves between systems at scheduled intervals, typically overnight. A finance team might see yesterday's transactions only when they arrive at the office the following morning. Sales reports refresh once per day. Inventory counts update after the warehouse closes.

Real-time integration, by contrast, processes information as events occur. When a customer places an order, inventory systems adjust immediately. When a payment clears, finance teams see it within seconds.

According to Gartner, real-time integration delivers data immediately as it is generated or received, providing users and applications with current information to enable time-sensitive decisions based on the latest available data rather than historical snapshots.

The architectural difference lies in how data flows. Batch systems collect information, hold it temporarily, then transfer it in bulk at predetermined times. Streaming architectures, the foundation of real-time integration, move data continuously through pub/sub models and event-driven processing.
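The contrast between the two flows can be sketched in a few lines of Python. This is a minimal in-process illustration, not a production design: the `EventBus` class, the `orders` topic, and the inventory figures are all hypothetical, and a real deployment would use a message broker rather than a local dictionary of handlers.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Tiny in-process publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Every subscriber receives the event as soon as it is published.
        for handler in self._subscribers[topic]:
            handler(event)


inventory = {"widget": 10}  # hypothetical stock level


def apply_order(order: dict) -> None:
    inventory[order["sku"]] -= order["qty"]


# Streaming path: inventory adjusts the moment the order event arrives.
bus = EventBus()
bus.subscribe("orders", apply_order)
bus.publish("orders", {"sku": "widget", "qty": 3})
stock_after_stream = inventory["widget"]

# Batch path: orders are held, then applied in bulk when the scheduled job runs.
batch_queue: list[dict] = [{"sku": "widget", "qty": 2}]
stock_before_batch_run = inventory["widget"]  # the queued order is not yet visible
for queued_order in batch_queue:
    apply_order(queued_order)
batch_queue.clear()
stock_after_batch_run = inventory["widget"]
```

In the batch path the second order exists but stays invisible to anyone reading `inventory` until the job runs. That window is the staleness the overnight model accepts.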

Batch processing emerged as a practical solution to infrastructure constraints that no longer exist.

In the 1980s and 1990s, network bandwidth was expensive, processing power was limited, and database locks during business hours would have frozen operations. Running data transfers overnight made sense. Systems could reconcile slowly without disrupting daily work. IT teams could monitor transfers during scheduled maintenance windows.

The model worked adequately when business decisions happened at similar speeds. Weekly sales meetings reviewed last week's numbers. Monthly financial closes took days to complete. Quarterly planning relied on data that was already weeks old.

For many organisations, batch processing remains operational. Legacy systems built on this architecture still function. The question is not whether these systems still work, but whether they serve current business requirements.

Three developments have made batch processing inadequate for mid-market firms competing today.

Cloud computing eliminated bandwidth costs as a meaningful factor. According to Integrate.io's research on real-time data integration, the streaming analytics market was valued at $23.4 billion in 2023 and is projected to reach $128.4 billion by 2030, growing at 28.3% annually. This growth significantly outpaces traditional data integration, reflecting how infrastructure capabilities have fundamentally changed.

Consider Walmart's approach to inventory management, documented in recent case studies on data analytics. The retailer implemented predictive analytics that integrates historical sales data, weather predictions, and consumer behaviour trends. This real-time demand forecasting allows dynamic stock level adjustments, reducing excess inventory costs while minimising stockouts. The same capability that once required enterprise-scale resources is now accessible to mid-market firms through cloud-native integration platforms.

The UAE's Personal Data Protection Law, effective since 2022 and aligned with GDPR principles, requires organisations to report data breaches to authorities within 72 hours. Batch processing that updates security logs once per day cannot meet this requirement. Financial institutions operating under Central Bank regulations face similar real-time monitoring obligations for transaction anomalies.

The shift from batch to streaming is not about technology preference. It reflects changed business conditions where delayed data creates measurable disadvantages.

The practical capabilities shown by streaming architectures extend beyond speed.

A telecommunications provider documented by MoldStud Research synchronised 1.2 billion records per hour between billing and CRM systems using microservices-based pipelines. This eliminated bottlenecks introduced by monolithic schedulers and provided instant visibility into transactional changes, allowing customer service teams to see billing updates during active calls rather than the following day.

When competitor pricing changes, inventory levels shift, or demand patterns emerge, systems can adjust immediately rather than waiting for overnight updates. For UAE-based firms competing in sectors like fintech and e-commerce, this responsiveness directly affects conversion rates.

According to a case study involving Visa, real-time outlier detection using machine learning reduced fraud by 26% compared to rule-based methods alone. Batch processing that flags suspicious transactions hours after they occur limits intervention options. Streaming architectures that analyse transactions as they happen enable immediate blocks or verification steps.
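To make the idea of checking each transaction as it arrives concrete, here is a minimal sketch. It uses a rolling z-score as a simple statistical stand-in, not the machine-learning approach described in the case study. The class name, window size, threshold, and sample amounts are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


class RollingOutlierDetector:
    """Flags values that sit far from the recent rolling average."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_history: int = 5) -> None:
        self.history: deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def check(self, amount: float) -> bool:
        """Return True if `amount` looks anomalous against recent history."""
        is_outlier = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(amount - mu) / sigma > self.threshold:
                is_outlier = True
        # Only normal-looking amounts update the baseline, so one extreme
        # value does not distort the check for the transactions that follow.
        if not is_outlier:
            self.history.append(amount)
        return is_outlier


detector = RollingOutlierDetector()
stream = [42.0, 39.5, 41.0, 40.2, 43.1, 38.9, 950.0, 41.7]  # hypothetical amounts
flags = [detector.check(amount) for amount in stream]
```

Because the decision is made inside `check`, a block or verification step could be triggered before the transaction completes. A batch job would only find the same outlier hours later.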

Amazon's use of instantaneous analytics to track inventory levels and customer behaviour decreased fulfilment times by 30%, according to research published by MoldStud. This capability streamlines logistics and elevates customer experience, resulting in a 25% increase in repeat purchases.

The pattern is consistent. Real-time data access changes what becomes possible, not just how quickly information moves.

Adopting streaming architectures requires more than replacing one technology with another.

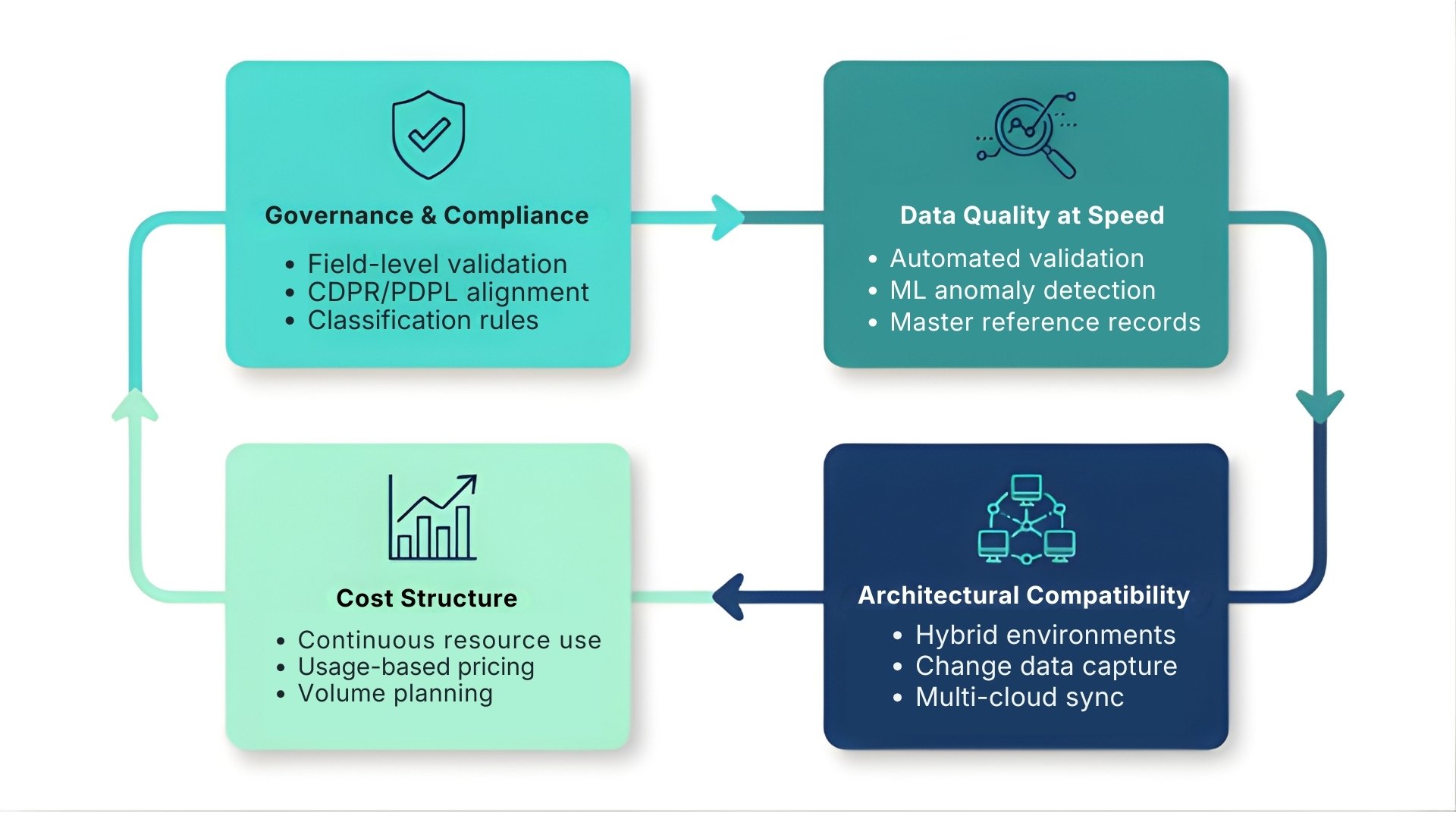

The first consideration is governance. For UAE organisations operating under the Personal Data Protection Law and firms handling EU data subject to GDPR, streaming architectures must include field-level validation at ingestion points. According to Gartner research cited in enterprise case studies, organisations that implement syntactic validation and immediate source feedback prevent up to 38% of downstream anomalies. This requires defining classification rules for information assets based on sensitivity and regulatory requirements, particularly in regulated sectors like healthcare and finance.
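Field-level validation with immediate source feedback might look something like the sketch below. The field names and regular expressions are hypothetical placeholders. Real rules would come from the organisation's own data classification policy.

```python
import re
from dataclasses import dataclass, field

# Hypothetical syntactic rules, one per field.
RULES = {
    "customer_id": re.compile(r"C\d{6}"),
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "amount": re.compile(r"\d+(\.\d{1,2})?"),
}


@dataclass
class ValidationResult:
    accepted: bool
    errors: dict[str, str] = field(default_factory=dict)


def validate_at_ingestion(record: dict[str, str]) -> ValidationResult:
    """Check every field before the record enters the pipeline.

    The returned errors can be sent straight back to the producing
    system, which is the "immediate source feedback" part.
    """
    errors: dict[str, str] = {}
    for name, pattern in RULES.items():
        value = record.get(name)
        if value is None:
            errors[name] = "missing field"
        elif not pattern.fullmatch(value):
            errors[name] = f"invalid format: {value!r}"
    return ValidationResult(accepted=not errors, errors=errors)


good = validate_at_ingestion(
    {"customer_id": "C123456", "email": "a@example.com", "amount": "19.99"}
)
bad = validate_at_ingestion({"customer_id": "123", "email": "a@example.com"})
```

Rejecting a record at the ingestion point keeps the malformed value out of every downstream system, instead of leaving it to be found during a later reconciliation.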

The second consideration is data quality at speed. Batch processing allowed time for manual review and correction. Real-time systems must validate, cleanse, and route data automatically. Companies achieving this successfully deploy machine-learning-based anomaly detection to identify outliers in streaming records. The approach requires establishing master reference records and automated matching algorithms, updated regularly against trusted registries.

The third consideration is architectural compatibility. Mid-sized firms typically operate hybrid environments with cloud-native SaaS systems alongside on-premises legacy applications. Integration platforms must support change data capture from legacy systems, Kafka streaming pipelines, and multi-cloud synchronisation strategies. According to research on iPaaS maturation published earlier this year, platforms now offer native support for this hybrid reality, eliminating the need to replace functional systems simply to achieve real-time capability.
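As a rough illustration of what change data capture hands to a downstream system, the sketch below applies a stream of change events to an in-memory replica. The event shape (`op`, `key`, `row`) is an assumption made for this example. Actual CDC tools define their own formats and read changes from the source database's transaction log.

```python
def apply_change(target: dict[int, dict], event: dict) -> None:
    """Apply one change event to the replica, keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Merge changed columns into the existing row (or create it).
        target[key] = {**target.get(key, {}), **event["row"]}
    elif op == "delete":
        target.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


replica: dict[int, dict] = {}

# Hypothetical events as they might be emitted from a legacy system.
changes = [
    {"op": "insert", "key": 1, "row": {"name": "Acme", "status": "active"}},
    {"op": "update", "key": 1, "row": {"status": "suspended"}},
    {"op": "insert", "key": 2, "row": {"name": "Globex", "status": "active"}},
    {"op": "delete", "key": 2},
]

for change in changes:
    apply_change(replica, change)
```

The legacy application itself is untouched. Only its changes are captured and replayed, which is why this pattern suits hybrid environments where replacing the source system is not an option.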

The fourth consideration is cost structure. Unlike batch processing where compute resources spike during scheduled runs, streaming architectures consume resources continuously. Cloud-native platforms with usage-based pricing can make this predictable, but organisations need clarity on data volumes and processing requirements to avoid billing surprises.

For organisations based in the UAE, real-time data integration carries specific regulatory implications.

The UAE Data Office, which oversees compliance with the Personal Data Protection Law, has not yet published executive regulations detailing technical implementation requirements. However, the law's alignment with GDPR provides clear direction. Data controllers and processors must maintain detailed records of processing activities, ensure data minimisation and purpose limitation, and implement security measures against unauthorised access.

Organisations in Dubai International Financial Centre and Abu Dhabi Global Market operate under separate data protection frameworks explicitly modelled on GDPR. DIFC's Data Protection Law and ADGM's regulations both mandate immediate breach reporting to the Commissioner. Real-time monitoring and logging become compliance requirements, not optional capabilities.

For firms operating across multiple jurisdictions, the Personal Data Protection Law's provisions on cross-border data transfers matter. Transfers outside the UAE require that the recipient country provides adequate data protection or that appropriate contractual safeguards exist. Streaming architectures that move data continuously across borders must implement these safeguards at the technical level, not just through contractual agreements.
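One way to read "at the technical level" is a check that runs on every event before it leaves the jurisdiction. The sketch below is a simplified illustration, not legal guidance: the jurisdiction labels, the adequacy set, and the safeguard pairs are hypothetical placeholders that a compliance team would need to supply.

```python
from dataclasses import dataclass

# Hypothetical policy data, maintained by a compliance team.
ADEQUATE_DESTINATIONS = {"COUNTRY_A"}
CONTRACTUAL_SAFEGUARDS = {("UAE", "COUNTRY_B")}  # (origin, destination) pairs


@dataclass(frozen=True)
class Event:
    origin: str
    destination: str
    contains_personal_data: bool


def transfer_allowed(event: Event) -> bool:
    """Decide whether a single streaming event may cross the border."""
    if not event.contains_personal_data:
        return True
    if event.destination == event.origin:
        return True
    if event.destination in ADEQUATE_DESTINATIONS:
        return True
    return (event.origin, event.destination) in CONTRACTUAL_SAFEGUARDS


allowed = transfer_allowed(Event("UAE", "COUNTRY_B", contains_personal_data=True))
blocked = transfer_allowed(Event("UAE", "COUNTRY_C", contains_personal_data=True))
```

Placing the check in the pipeline means an unapproved destination is refused per event, rather than discovered in a later audit.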

The regulatory environment supports real-time integration as the default approach. Batch processing that delays breach detection, limits audit trail granularity, or prevents timely data subject rights responses creates compliance risk.

The data integration market is projected to grow from $15.18 billion in 2024 to $30.27 billion by 2030, according to Integrate.io's analysis. That 12.1% annual growth rate looks modest next to the streaming analytics segment, which is growing at 28.3% annually.
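As a sanity check, the compound annual growth rates implied by the quoted start and end values can be recomputed. This is a back-of-the-envelope sketch that assumes simple annual compounding between the two endpoint years. The results land close to, though not exactly on, the cited percentages, and the gap may come from a different base year or rounding in the source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in billions of US dollars, as quoted in the article.
integration_rate = cagr(15.18, 30.27, 2030 - 2024)  # roughly 12% per year
streaming_rate = cagr(23.4, 128.4, 2030 - 2023)     # roughly 27 to 28% per year

print(f"Data integration: {integration_rate:.1%}")
print(f"Streaming analytics: {streaming_rate:.1%}")
```

On either reading, the streaming segment compounds at more than twice the rate of the wider integration market.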

That disparity reveals the direction. Organisations are integrating more data, and they are doing it differently, with architectures designed for continuous flow rather than periodic transfer.

For mid-market firms, this transition is about meeting baseline expectations that have shifted. Customers expect order confirmations immediately. Regulators expect breach notifications within hours. Operational teams expect dashboards that reflect current state, not yesterday's close of business.

Batch processing solved real problems when infrastructure limited alternatives. Those constraints no longer exist. The firms that recognise this and act accordingly are not innovating. They are meeting a new baseline.

McKinsey & Company, "How to build a data architecture to drive innovation today and tomorrow" (https://mckinsey.com/~/media/McKinsey/Business Functions/McKinsey Digital/Our Insights/How to build a data architecture to drive innovation today and tomorrow/How-to-build-a-data-architecture-to-drive-innovation.pdf)

Gartner, "Data Integration Strategies and Tools for D&A Leaders", September 2025

Omdena, "Data Analytics Case Study Examples for 2025", November 2025

UAE Government, "Data Protection Laws", accessed November 2025

Meydan Free Zone, "UAE Data Protection Laws & GDPR Compliance Guide 2025"

Chambers and Partners, "Data Protection & Privacy 2025: UAE", 2025


